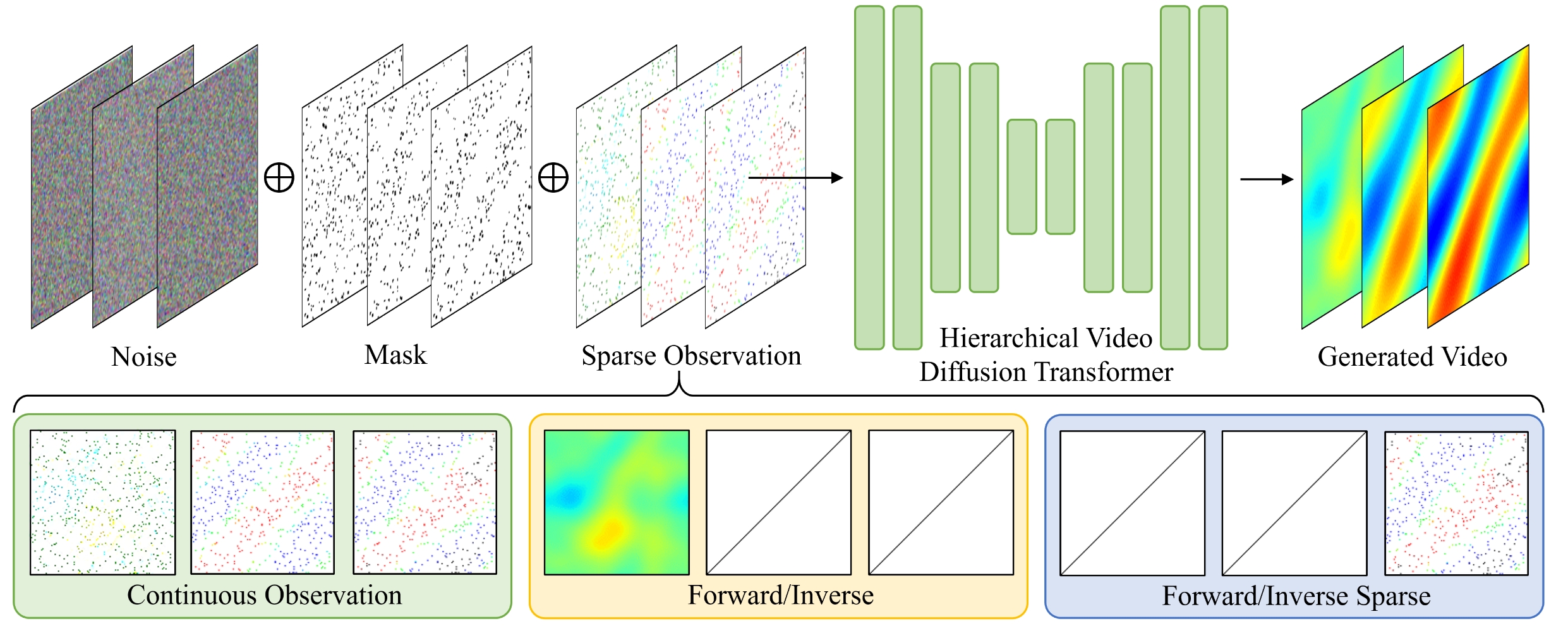

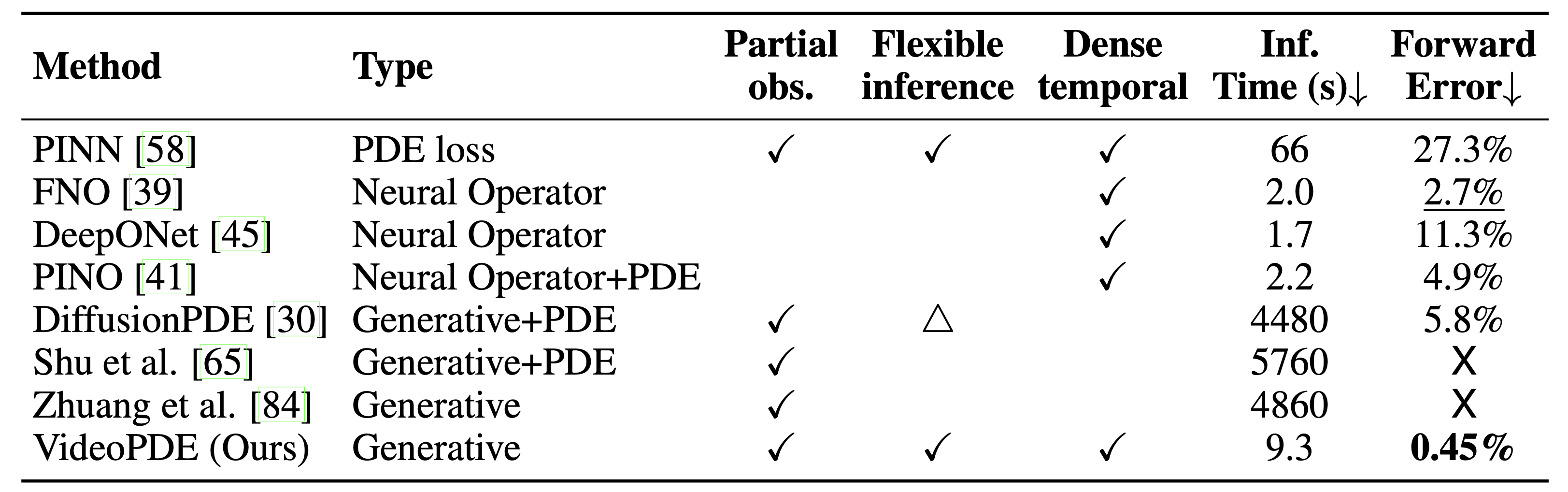

We present a unified framework for solving partial differential equations (PDEs) using

video-inpainting diffusion transformer models. Unlike existing methods that devise

specialized strategies for either forward or inverse problems under full or partial

observation, our

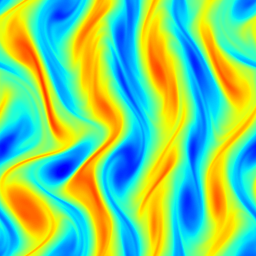

approach unifies these tasks under a single, flexible generative framework. Specifically, we

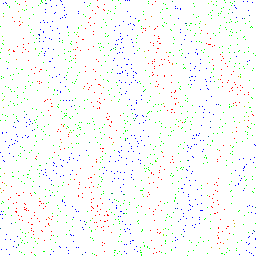

recast PDE-solving as a generalized inpainting problem, e.g., treating forward prediction as

inferring missing spatiotemporal information of future states from initial conditions. To

this end, we design a transformer-based architecture that conditions on arbitrary patterns

of known data to infer missing values across time and space. Our method proposes pixel-space

video diffusion models for fine-grained, high-fidelity inpainting and conditioning, while

enhancing computational efficiency through hierarchical modeling. Extensive experiments show

that our video inpainting-based diffusion model offers an accurate and versatile solution

across a wide range of PDEs and problem setups, outperforming state-of-the-art baselines.